Get results after completion

You can get results for various levels of processing.

Description of the results

The API provides advanced audio analysis capabilities, offering a range of predictions and insights about spoken content. It supports tasks such as speaker diarization, automatic speech recognition (ASR), language identification, and various behavioral and vocal attributes. It assesses the gender and age range of the speaker, the emotion, arousal, and positivity of the speech, as well as the speaking rate and level of hesitation. Engagement and politeness levels are also measured, offering nuanced insights into the speaker's interaction style. Each task returns detailed predictions with confidence scores, enabling comprehensive analysis and understanding of audio data. This rich set of features supports applications in fields like customer service, content analysis, and behavioral research.

The following is the list of attributes that the API calculates:

- Speaker Diarization:
  - Identifies utterances of different speakers within an audio clip.
  - Labels each segment with a unique speaker identifier.
  - Marks the start and end time of each speaker turn.

- Automatic Speech Recognition (ASR):
  - Transcribes spoken words into text.
  - Provides accurate textual representation of the audio content.

- Language Identification:
  - Determines the language used in the audio.
  - Returns language labels with confidence scores for multiple languages.

- Emotion Detection:
  - Analyzes the emotional tone of the speech.
  - Classifies emotions such as "angry," "neutral," "happy," and "sad."

- Strength Detection:
  - Analyzes the strength level of the speech.
  - The levels are "weak," "neutral," and "strong."

- Gender and Age Prediction:
  - Assesses the speaker's gender and age range.
  - Provides confidence scores and dominant segments for each prediction.

- Positivity Analysis:
  - Evaluates the overall positivity of the speech.
  - Classifies speech as "positive," "neutral," or "negative."

- Speaking Rate:
  - Measures the rate of speech.
  - Labels the speech as "slow," "normal," "fast," or variations thereof.

- Hesitation Detection:
  - Identifies moments of hesitation in speech.
  - Classifies segments with "yes" or "no" for hesitation.

- Engagement Assessment:
  - Analyzes the level of engagement of the speaker.
  - Classifies engagement as "engaged," "neutral," or "withdrawn."

- Politeness Evaluation:
  - Measures the politeness level of the speech.
  - Labels speech as "polite," "neutral," or "rude."
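The label sets listed above can be collected into a lookup table for client-side validation. The following is a minimal sketch, not part of the API. The task keys are assumed to match the `task` field of the example response, the speaking-rate variations are taken to be the "very slow" and "very fast" labels that appear in that response, and `is_known_label` is a hypothetical helper.

```python
# Label sets copied from the attribute list above.
# Assumption: the dictionary keys mirror the "task" field in the example response.
KNOWN_LABELS = {
    "emotion": {"angry", "neutral", "happy", "sad"},
    "strength": {"weak", "neutral", "strong"},
    "positivity": {"positive", "neutral", "negative"},
    "speaking_rate": {"very slow", "slow", "normal", "fast", "very fast"},
    "hesitation": {"yes", "no"},
    "engagement": {"engaged", "neutral", "withdrawn"},
    "politeness": {"polite", "neutral", "rude"},
}


def is_known_label(task: str, label: str) -> bool:
    """Return True if `label` is one of the documented labels for `task`.

    Hypothetical helper: tasks without a fixed label set in this table
    (for example "asr" or "diarization") always return False.
    """
    return label in KNOWN_LABELS.get(task, set())
```

Since the emotion list is introduced with "such as", the API may return labels that are not in this table, so an unknown label is better treated as a warning than as an error.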

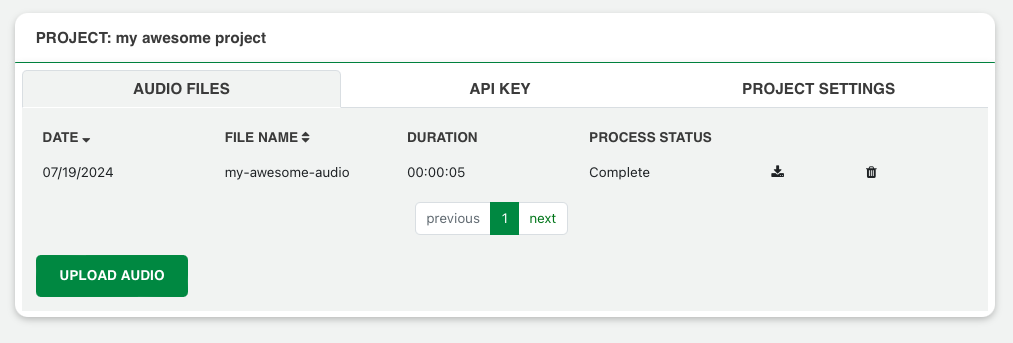

Retrieving the results - using the UI

Once the audio has been processed, you can click on the download button to get the JSON results.

Retrieving the results - using the API

Once the status of your job changes to “2: completed”, you can send a GET request for the results, using the job's unique pid. The response body is JSON containing the results.

curl --location 'https://api.behavioralsignals.com/v5/clients/<your-project-id>/process/<pid>/result' \
--header 'X-Auth-Token: <your-api-token>'
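The curl request above can also be sent from Python. The following is a minimal sketch using only the standard library. The function names `build_result_request` and `fetch_results` and the 30-second timeout are illustrative assumptions, not part of an official client.

```python
import json
import urllib.request

BASE_URL = "https://api.behavioralsignals.com/v5"


def build_result_request(project_id: str, pid: str, api_token: str) -> urllib.request.Request:
    """Build the GET request for the results of one processed job."""
    url = f"{BASE_URL}/clients/{project_id}/process/{pid}/result"
    return urllib.request.Request(url, headers={"X-Auth-Token": api_token}, method="GET")


def fetch_results(project_id: str, pid: str, api_token: str, timeout: float = 30.0) -> list:
    """Send the request and decode the JSON body (a list of task results).

    urlopen raises urllib.error.HTTPError for non-2xx responses, so callers
    may want to catch it, for example when the job has not completed yet.
    """
    request = build_result_request(project_id, pid, api_token)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

A call would look like `fetch_results("<your-project-id>", "<pid>", "<your-api-token>")`, with the placeholders replaced by your own values.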

Example response:

[

{

"endTime": "4.67",

"finalLabel": "SPEAKER_00",

"id": "0",

"level": "utterance",

"prediction": [

{

"label": "SPEAKER_00"

}

],

"startTime": "0.069",

"task": "diarization"

},

{

"endTime": "4.67",

"finalLabel": " Life will give you whatever experience is most helpful for the evolution of your consciousness.",

"id": "0",

"level": "utterance",

"prediction": [

{

"label": " Life will give you whatever experience is most helpful for the evolution of your consciousness."

}

],

"startTime": "0.069",

"task": "asr"

},

{

"endTime": "4.67",

"finalLabel": "en",

"id": "0",

"level": "utterance",

"prediction": [

{

"label": "en",

"posterior": "0.9970703125"

},

{

"label": "la",

"posterior": "0.0005102157592773438"

},

{

"label": "zh",

"posterior": "0.0003731250762939453"

}

],

"startTime": "0.069",

"task": "language"

},

{

"endTime": "4.67",

"id": "0",

"level": "utterance",

"prediction": [

{

}

],

"startTime": "0.069",

"task": "features"

},

{

"endTime": "4.67",

"finalLabel": "female",

"id": "0",

"level": "utterance",

"prediction": [

{

"dominantInSegments": [

0,

1,

2

],

"label": "female",

"posterior": "0.9555"

},

{

"label": "male",

"posterior": "0.0445"

}

],

"startTime": "0.069",

"task": "gender"

},

{

"endTime": "4.67",

"finalLabel": "46 - 65",

"id": "0",

"level": "utterance",

"prediction": [

{

"dominantInSegments": [

1,

2

],

"label": "46 - 65",

"posterior": "0.5991"

},

{

"dominantInSegments": [

0

],

"label": "18 - 22",

"posterior": "0.3442"

},

{

"label": "31 - 45",

"posterior": "0.0567"

},

{

"label": "23 - 30",

"posterior": "0.0"

}

],

"startTime": "0.069",

"task": "age"

},

{

"endTime": "4.67",

"finalLabel": "angry",

"id": "0",

"level": "utterance",

"prediction": [

{

"dominantInSegments": [

0

],

"label": "angry",

"posterior": "0.3789"

},

{

"dominantInSegments": [

1,

2

],

"label": "neutral",

"posterior": "0.3691"

},

{

"label": "happy",

"posterior": "0.2325"

},

{

"label": "sad",

"posterior": "0.0196"

}

],

"startTime": "0.069",

"task": "emotion"

},

{

"endTime": "4.67",

"finalLabel": "neutral",

"id": "0",

"level": "utterance",

"prediction": [

{

"dominantInSegments": [

0,

1,

2

],

"label": "neutral",

"posterior": "0.5427"

},

{

"label": "negative",

"posterior": "0.3294"

},

{

"label": "positive",

"posterior": "0.128"

}

],

"startTime": "0.069",

"task": "positivity"

},

{

"endTime": "4.67",

"finalLabel": "neutral",

"id": "0",

"level": "utterance",

"prediction": [

{

"dominantInSegments": [

0,

1

],

"label": "neutral",

"posterior": "0.5839"

},

{

"dominantInSegments": [

2

],

"label": "strong",

"posterior": "0.3992"

},

{

"label": "weak",

"posterior": "0.0169"

}

],

"startTime": "0.069",

"task": "strength"

},

{

"endTime": "4.67",

"finalLabel": "fast",

"id": "0",

"level": "utterance",

"prediction": [

{

"dominantInSegments": [

1,

2

],

"label": "fast",

"posterior": "0.5784"

},

{

"dominantInSegments": [

0

],

"label": "normal",

"posterior": "0.3864"

},

{

"label": "slow",

"posterior": "0.0351"

},

{

"label": "very slow",

"posterior": "0.0"

},

{

"label": "very fast",

"posterior": "0.0"

}

],

"startTime": "0.069",

"task": "speaking_rate"

},

{

"endTime": "4.67",

"finalLabel": "no",

"id": "0",

"level": "utterance",

"prediction": [

{

"dominantInSegments": [

0,

1,

2

],

"label": "no",

"posterior": "0.9661"

},

{

"label": "yes",

"posterior": "0.0339"

}

],

"startTime": "0.069",

"task": "hesitation"

},

{

"endTime": "4.67",

"finalLabel": "engaged",

"id": "0",

"level": "utterance",

"prediction": [

{

"dominantInSegments": [

0,

1,

2

],

"label": "engaged",

"posterior": "0.8753"

},

{

"label": "neutral",

"posterior": "0.1239"

},

{

"label": "withdrawn",

"posterior": "0.0008"

}

],

"startTime": "0.069",

"task": "engagement"

},

{

"endTime": "4.67",

"finalLabel": "polite",

"id": "0",

"level": "utterance",

"prediction": [

{

"dominantInSegments": [

2

],

"label": "polite",

"posterior": "0.3999"

},

{

"dominantInSegments": [

0,

1

],

"label": "neutral",

"posterior": "0.3601"

},

{

"label": "rude",

"posterior": "0.24"

}

],

"startTime": "0.069",

"task": "politeness"

}

]
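The response above can be post-processed with a few small helpers. The sketch below is illustrative only: `SAMPLE` is a trimmed copy of the example response, the helper names are hypothetical, and `startTime`/`endTime` are assumed to be seconds. Numeric fields arrive as strings, so they are converted with `float` before use.

```python
from typing import Optional

# Trimmed sample with the same shape and values as the example response above.
SAMPLE = [
    {"task": "language", "id": "0", "level": "utterance",
     "startTime": "0.069", "endTime": "4.67", "finalLabel": "en",
     "prediction": [{"label": "en", "posterior": "0.9970703125"},
                    {"label": "la", "posterior": "0.0005102157592773438"}]},
    {"task": "emotion", "id": "0", "level": "utterance",
     "startTime": "0.069", "endTime": "4.67", "finalLabel": "angry",
     "prediction": [{"label": "angry", "posterior": "0.3789", "dominantInSegments": [0]},
                    {"label": "neutral", "posterior": "0.3691", "dominantInSegments": [1, 2]}]},
    {"task": "features", "id": "0", "level": "utterance",
     "startTime": "0.069", "endTime": "4.67", "prediction": [{}]},
]


def final_labels(results: list) -> dict:
    """Map (utterance id, task) to its finalLabel, skipping entries without one."""
    return {
        (item["id"], item["task"]): item["finalLabel"]
        for item in results
        if "finalLabel" in item
    }


def top_prediction(item: dict) -> Optional[tuple]:
    """Return (label, posterior as float) for the highest-scoring prediction.

    Returns None when no prediction carries both a label and a posterior,
    as is the case for the "asr" and "features" entries.
    """
    scored = [
        (p["label"], float(p["posterior"]))
        for p in item.get("prediction", [])
        if "label" in p and "posterior" in p
    ]
    return max(scored, key=lambda pair: pair[1]) if scored else None


def duration_seconds(item: dict) -> float:
    """Length of the utterance, assuming the time fields are seconds."""
    return float(item["endTime"]) - float(item["startTime"])
```

Keying the labels by both `id` and `task` keeps results separate when a response contains more than one utterance.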
